Data security breaches often begin with a moment of human vulnerability under pressure or perceived authority, says Mark T. Hofmann, a crime and intelligence profiler and business psychologist who researches hackers’ methods on the Darknet. In an interview with ICT&health Global, he explains why cybercrime is primarily a psychological challenge, how attackers exploit human behavior, and why healthcare organizations are especially at risk.

What do you do in practice as a crime and intelligence analyst and business psychologist?

My approach is risky but effective. I go where others don’t look: into the Darknet, hacker forums, Telegram, and leaked chat logs like the Conti leaks. In practice, I combine behavioral analysis with direct research on the Darknet and interactions with hackers. I try to analyze hackers first-hand: Who are they? How do they think? What drives them? How do they organize? The goal is always the same: I learn from the “bad guys” to show organizations and authorities how to protect themselves. I focus on the human side of cybersecurity, such as awareness and cyber-profiling. At first glance, you might think cybercrime is a technical problem, but on the side of both victims and hackers, there is a lot of psychology involved. That’s my focus.

From your profiling work with hackers and cybercriminals, what psychological traits show up most often? What should everybody know about their motives?

Hackers are often young, highly intelligent, and well-educated, with a strong desire to challenge the system. I would describe many of them as “digital anarchists” because they enjoy the cat-and-mouse game with authorities and are proud of their skill set. Money is often just the first motivator; the thrill, the challenge, the desire to solve the “puzzle,” and prestige matter just as much. Even hackers who already have enough money continue their activities. It’s not just about money; it’s about status, power, attention, and mastery of systems. I have identified a wide range of motives over time. They are not all the same, but this pattern is clear.

Why do people keep falling for social engineering attacks despite years of awareness campaigns?

Cybercrime is not a technical problem. It’s a psychological problem. Attackers always use the same triggers: emotion, time pressure, and unusual requests. Emotion may be the most important one. This works because humans react reflexively before thinking. Awareness alone often isn’t enough when stress, routine, or surprise comes into play. Even experienced people can be deceived, especially when trust is implied through voice, authority, or urgency. Imagine you get an emergency phone call from your daughter, in your daughter’s actual voice. With deepfake technology, that’s possible. And yes, this can be damn convincing.

From a psychological point of view, what makes healthcare professionals and administrative staff especially vulnerable to social engineering? Which everyday behaviors in healthcare organizations create the highest cyber risk?

Healthcare staff operate under high stress and responsibility. Doctors, nurses, and administrative personnel act quickly and under pressure. Attackers exploit this with social engineering: a brief call, email, or message creates urgency, and rational thinking shuts down. Typical risks include leaving laptops unattended, sharing passwords over the phone or writing them on Post-it notes, opening attachments without verification, or discussing patient information loudly in public areas. Every minor routine error can become a gateway for attackers. One night, I had a long wait at the Charité in Berlin and walked down the corridors. The number of computers I saw unlocked and unsupervised was quite shocking.

Humans are the weakest link in cybersecurity. Which cognitive biases are attackers exploiting most effectively?

The main levers are emotion, urgency, and sometimes the authority principle: “This is the police,” “There is a problem with your bank account, we need to act fast.” Especially when someone tries to create pressure to make us do something unusual, like revealing passwords or transferring money, we should take a breath, slow down, and do a quick reality check: Would my bank call me and ask for the password? Would the tax authority call me? Would my son ask for money on the phone? Think before you act and listen to your gut feeling.

What does “behavioral profiling” actually add to cyber defense that technical tools cannot?

Know your enemy. I like to describe cybercrime like a game of behavioral chess. The more we understand the mind, motives, and methods of hackers, the better we can defend and prepare. If we understand now how hackers use AI and deepfakes and where this trend is going, we can prepare.

There are behaviors that technical security measures cannot address. If you reveal a password over the phone because someone claims to be “IT support” and there is an emergency, what can your firewall or technical security team do about it? I am not opposed to technical security measures. Long, varied passwords, firewalls, network security, and multi-factor authentication are essential. But we need both a technical and a human firewall. Secure your systems and train your people.

What exactly does “human firewall” mean?

A “human firewall” means people in the organization aren’t the weak point; they are the first line of defense. It’s about awareness, critical thinking, routines, and a security culture. My motto as a keynote speaker is “Make Cybersecurity great again.” I think we make this stuff sound too boring, and people hate cybersecurity awareness training. But it does not have to be this way. A journey into the Darknet can be engaging, like a Netflix movie, and at the same time teach people how to protect themselves.

Is there clear guidance on how to create an organizational culture to minimize cyber risk?

I have two main principles to truly inspire people. First, “Make Cybersecurity great again”: I aim to make it as engaging as a true-crime series (which it actually is) and entertaining at the same time. After my first TEDx talk on the “Psychology of Cybercrime,” someone said: “That was entertaining, but do you really think that a deadly serious topic like cybercrime should be entertaining?” No, it should not be entertaining; it must be entertaining. It is the only way to reach people who are not interested in these IT and cybersecurity topics. And isn’t that the main target group?

Second, make it about people and not just about business. We all care about our loved ones and our own well-being. I always try to connect personal risks and business risks: what “CEO fraud” is in business, the “grandparent scam” is in private life. Same modus operandi. If you make it about people and not just about business, they will listen. Especially in hospitals, it’s not (just) about money, but about lives.

With AI, deepfakes, and voice cloning on the rise, how are psychological attack patterns changing? In the daily stress and time pressure of healthcare, is it realistic to build a human firewall against phone phishing, which is hard to detect?

Germany’s Federal Criminal Police Office (BKA) warns that AI could increase the quantity and quality of cyber attacks. AI and deepfakes are elevating social engineering to a new level. A few seconds of recorded voice, a short video, or even a voicemail is enough to create a convincing clone. This makes CEO fraud, impersonation, and identity attacks dramatically more effective. In healthcare, the combination of time pressure, stress, and multitasking makes detection extremely hard. Yet a human firewall is realistic if staff are trained to pause, verify, and follow established routines: call the real number, ask verification questions, or use pre-agreed code words. Awareness, combined with culture and routine, remains the most effective defense.

Which issues should cybersecurity teams understand better about human behavior to stay ahead of attackers?

Let me list three main things:

- Humans react reflexively under emotion and stress. Attackers systematically exploit this, and AI will increase both the quantity and the quality of these attacks.

- Authority and urgency are powerful levers; even highly trained people can be deceived.

- Most cyber attacks start with human error, but humans can also be the strongest defense if trained, motivated, and empowered. Make it about people. Make it entertaining and exciting.

If you had to give hospital management three psychological priorities to improve cybersecurity, what would they be?

If I had to boil it down for hospital management, I’d say the first priority is to stop thinking of cybersecurity as a purely technical issue and start treating people as the real first line of defense. That means building and maintaining a strong human firewall through continuous awareness, clear routines, and a culture where security is part of everyday work, not an occasional training slide deck.

The second priority is to normalize verification. Staff should feel encouraged to pause, double-check, and verify before acting, even under pressure. Multi-factor authentication, call-backs, or simple code-word procedures should be seen as supportive safety nets, not as signs of mistrust or bureaucracy.

And finally, it’s crucial to help staff understand the psychology of attackers. When people learn why attacks work, how urgency, authority, and fear are deliberately used, and which cognitive biases are exploited, they become much better at spotting red flags and resisting manipulation in real-world situations.

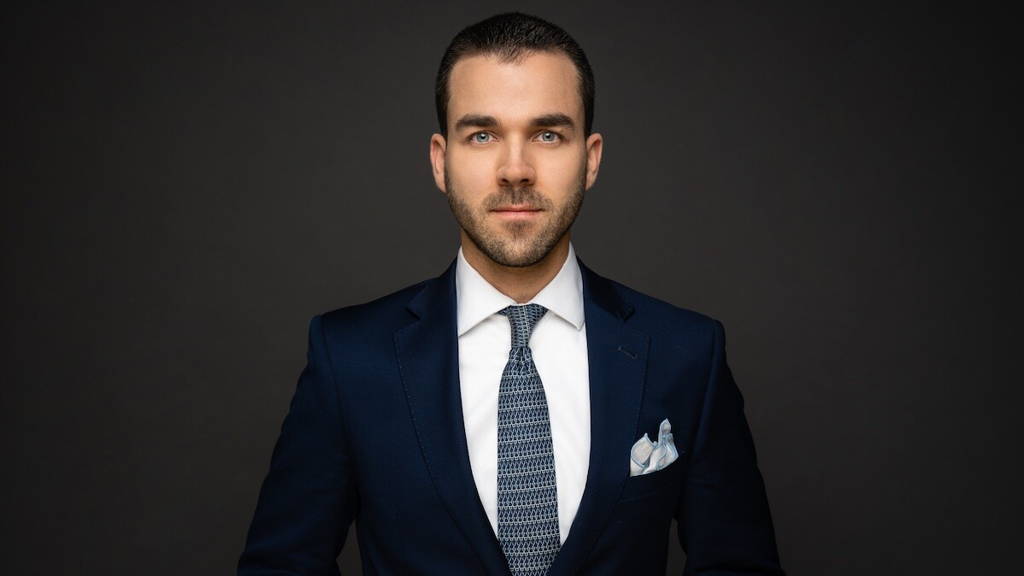

Mark T. Hofmann is a Crime & Intelligence Analyst (“Profiler”) and Business Psychologist (M.A.). He studies hackers on the Darknet to gain an insider perspective. He is a cyber profiler known from international television and streaming series (CNN, CBS, Forbes, 60 Minutes Australia). As a keynote speaker, he inspires audiences worldwide by discussing the Psychology of Cybercrime and the Dark Side of AI. Website: www.mark-thorben-hofmann.de/en/cybercrime